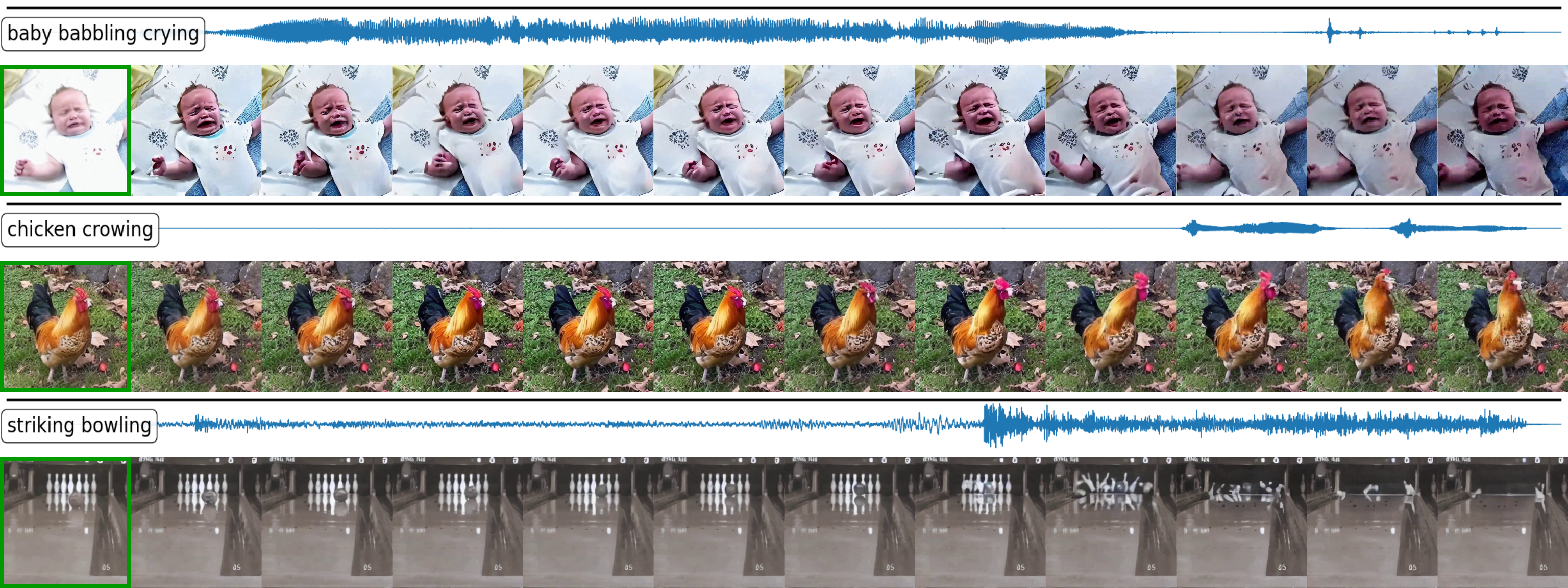

AVSync15 is a high-quality, synchronized audio-video dataset curated from VGGSound through both automatic and manual curation steps. It has the following attributes:

- High semantic and temporal audio-visual correlation: Audio and visual content are not only semantically aligned but also synchronized at every timestamp. Visual motions are mostly triggered by audio, and vice versa.

- Clean and stable audio-visual content: Visual motions result from object dynamics rather than from camera viewpoint changes or scene transitions. Frames are temporally consistent, with no sharp changes, yet dynamic enough to contain rich object motion. Audio tracks are clean enough to describe the visual motion and are free of overwhelming out-of-scene sounds.

- Rich in audio-video synchronization cues: We remove the classes and videos where synchronization cues are minimal, i.e., where shifting the audio along the time axis cannot be perceived when it is paired with the unshifted video. This excludes ambient classes such as raining, fire crackling, and running fans, as well as videos with overly complex audio-visual content.

- Diverse in categories: It contains videos longer than 2 seconds from 15 dynamic-motion classes. Each class is split into 90 training and 10 testing videos (1,350 training and 150 testing videos in total).
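The synchronization-cue criterion relies on pairing a time-shifted audio track with the unshifted video. A minimal sketch of producing such a shift follows, assuming a mono waveform given as a plain sequence of samples. The helper name `shift_audio` and the choice to zero-fill vacated samples are illustrative assumptions, not part of any AVSync15 tooling.

```python
from typing import List, Sequence


def shift_audio(samples: Sequence[float], sample_rate: int, offset_s: float) -> List[float]:
    """Shift a mono waveform in time by offset_s seconds (hypothetical helper).

    A positive offset delays the audio relative to the video; a negative
    offset advances it. Vacated samples are filled with silence (0.0), so
    the output has the same length as the input.
    """
    n = round(offset_s * sample_rate)  # offset in whole samples
    length = len(samples)
    if abs(n) >= length:
        # The shift is at least as long as the clip: nothing remains.
        return [0.0] * length
    if n >= 0:
        # Delay: prepend silence and drop the tail.
        return [0.0] * n + list(samples[: length - n])
    # Advance: drop the head and append silence.
    return list(samples[-n:]) + [0.0] * (-n)
```

For example, `shift_audio(samples, 16000, 0.5)` delays a 16 kHz track by half a second. Keeping the length unchanged means the shifted track still spans exactly the same frames as the original clip.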
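The duration filter and per-class split can be illustrated with a short sketch. It assumes a hypothetical input that maps each class name to `(video_id, duration_in_seconds)` pairs. The function `split_per_class` and its seeded shuffle are illustrative choices and are not the procedure used to produce the official AVSync15 split.

```python
import random
from typing import Dict, List, Tuple

MIN_DURATION_S = 2.0   # keep only videos longer than 2 seconds
TRAIN_PER_CLASS = 90   # training videos per class
TEST_PER_CLASS = 10    # testing videos per class


def split_per_class(
    videos: Dict[str, List[Tuple[str, float]]], seed: int = 0
) -> Tuple[Dict[str, List[str]], Dict[str, List[str]]]:
    """Hypothetical 90/10 per-class split over duration-filtered videos."""
    rng = random.Random(seed)
    train: Dict[str, List[str]] = {}
    test: Dict[str, List[str]] = {}
    needed = TRAIN_PER_CLASS + TEST_PER_CLASS
    # Iterate classes in sorted order so the RNG is consumed reproducibly.
    for label, clips in sorted(videos.items()):
        ids = sorted(vid for vid, dur in clips if dur > MIN_DURATION_S)
        if len(ids) < needed:
            raise ValueError(
                f"class {label!r} has {len(ids)} eligible videos, need {needed}"
            )
        rng.shuffle(ids)
        train[label] = ids[:TRAIN_PER_CLASS]
        test[label] = ids[TRAIN_PER_CLASS:needed]
    return train, test
```

Sorting the IDs before the seeded shuffle makes the result independent of input order, so the same seed always reproduces the same split. With 15 classes, it yields 1,350 training and 150 testing videos.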